Claude Opus 4.5 in Practice: Strava Analytics App from a Developer's Perspective

I had Claude Opus 4.5 build a Strava analytics app. How does the LLM perform in practice from a senior developer's point of view? An honest assessment of code quality and the development process.

Can Opus 4.5 really create complete apps without iteration? The hype is real, and so is my skepticism as a developer. To put it to the test, I had Opus 4.5 build a web app that summarizes and visualizes my 2025 Strava data—after Strava put the year-in-review feature behind a paywall. But that's a different story.

TL;DR

After a structured planning phase, Opus 4.5 delivered a working prototype in just a few hours. Impressive. However, the OAuth flow was implemented incorrectly, the wrong year was calculated, and the code quality left room for improvement. The model got me about 80% of the way; the remaining 20% required classic debugging and manual fixes. For simple greenfield projects, vibe coding is a productivity boost, but without experienced developers validating and refining the output, it remains a tool with limitations.

Coding with LLM/AI

Since the big AI boom, I've been using LLMs as tools in software development: in my IDE (VSCode + Cursor), in web apps (ChatGPT, Claude), and in the command line (Claude Code CLI). I've continuously followed the developments, integrated MCP servers (e.g., Context7 for documentation), created agents, and written system prompts to solve context-specific problems.

My experience: These tools are extremely helpful for discussing ideas or developing concepts—especially in new environments or with unfamiliar frameworks. LLMs have positively changed how I work and enable me to develop features faster and more precisely.

However, I've never let AI blindly commit code. In more complex projects and monorepos where multiple modules interact, LLMs rarely understood all the connections. In those situations, I had to build specific context and discuss that exact vertical—LLM as rubber duck, not as autonomous developer.

But perhaps it's time to question this view. The field is moving at a remarkable pace. If you believe the voices in our industry, we're just a stone's throw away from AGI. I'm skeptical about that claim, but still curious about what the medium-term future holds for software developers.

The Experiment

The goal: Create a web app that summarizes and visualizes Strava activities from 2025. The background: Strava put the year-in-review behind a paywall—only premium members get this information. Apple doesn't offer a yearly overview of all activities either. So: problem identified, solution needed.

For development, I used Claude Code in the terminal with Opus 4.5 on my Pro plan.

Create a Plan

In planning mode, I discussed the task and approach with Opus: OAuth authorization, relevant Strava APIs, loading activity data. After user authorization, their activities should be read and key metrics (type, date, distance, duration, elevation gain) presented numerically and graphically.
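To make the data side of this concrete, here is a minimal TypeScript sketch of the aggregation step: group activities by type and sum distance, moving time, and elevation gain. The field names (sport_type, start_date, distance, moving_time, total_elevation_gain) follow Strava's activity model as I understand it and should be checked against the API reference; summarizeByType and the TypeTotals shape are hypothetical and not what Opus generated.

```typescript
// Minimal shape of a Strava activity; only the fields used here.
// Assumed units: distance and elevation in meters, times in seconds.
interface StravaActivity {
  sport_type: string;           // e.g. "Run", "TrailRun", "Ride"
  start_date: string;           // ISO 8601 timestamp
  distance: number;             // meters
  moving_time: number;          // seconds
  total_elevation_gain: number; // meters
}

interface TypeTotals {
  count: number;
  distanceKm: number;
  movingHours: number;
  elevationM: number;
}

// Groups activities by sport type and sums the key metrics per group.
function summarizeByType(activities: StravaActivity[]): Map<string, TypeTotals> {
  const totals = new Map<string, TypeTotals>();
  for (const a of activities) {
    const t = totals.get(a.sport_type) ?? { count: 0, distanceKm: 0, movingHours: 0, elevationM: 0 };
    t.count += 1;
    t.distanceKm += a.distance / 1000;     // meters -> kilometers
    t.movingHours += a.moving_time / 3600; // seconds -> hours
    t.elevationM += a.total_elevation_gain;
    totals.set(a.sport_type, t);
  }
  return totals;
}
```

The resulting map can feed both the numeric summary and the charts, so the UI never has to re-derive totals itself.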

Technologically, I had one requirement: frontend and backend in TypeScript. I deliberately left the choice of frameworks open. The model was to save the plan as a Markdown file so that development could continue with the right context. The plan was also to be divided into phases, so the app would be built step by step instead of giving the model too much freedom at once.

Conclusion: Planning went surprisingly smoothly. Many decisions were correctly planned from the start; only some details needed further refinement. Even without the subsequent vibe coding step, this is a perfectly sensible use of LLMs.

Vibe Coding

After completing the plan, I switched to Claude Code's auto-accept mode and implemented all eight phases step by step, each with a single prompt: implement phase X. Since Claude Code can compile the project at any time and read error messages, it was possible to implement all phases with minimal input.

Conclusion: Impressive at first glance. The app worked for the most part: user authorized, Strava data loaded, activities summarized and visualized. However, by exclusively using the expensive Opus 4.5 model, I had reached my Pro plan's daily limit at this point.

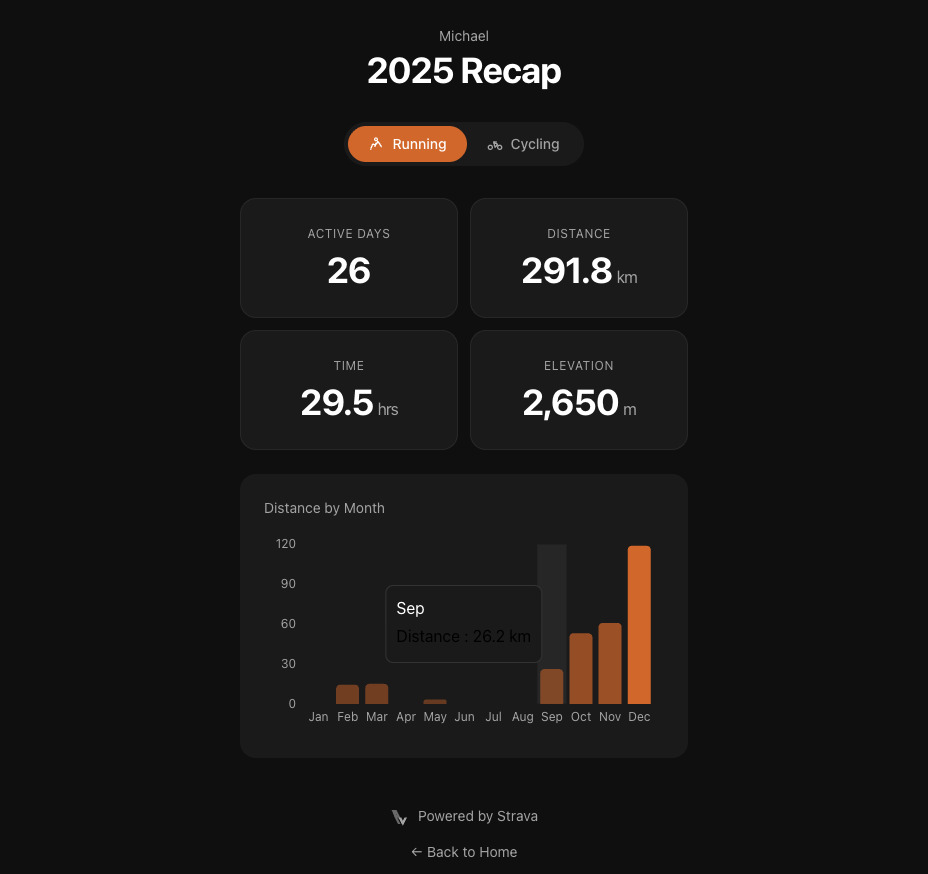

Here's the result of the first vibe coding iteration:

Web app after the first vibe coding iteration

Bug Fixing and Features

Upon closer inspection, problems emerged:

OAuth flow broken: Authorization happened on every app start, even though it should only be needed once. The refresh token was completely ignored—despite having Strava API documentation in context. Opus was able to implement the correct flow after additional prompts.
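For reference, the flow the model initially skipped looks roughly like this: keep the token set, and on each app start refresh the access token only when it is about to expire, instead of sending the user through authorization again. This is a sketch assuming Strava's token endpoint at https://www.strava.com/oauth/token with grant_type=refresh_token; the helper names and the five-minute safety margin are my own choices.

```typescript
// Subset of the token data returned by Strava's token endpoint.
interface StravaTokens {
  access_token: string;
  refresh_token: string;
  expires_at: number; // Unix time in seconds
}

const TOKEN_URL = "https://www.strava.com/oauth/token";

// Refresh slightly early so no request starts with an almost-expired token.
function needsRefresh(tokens: StravaTokens, nowSeconds: number, marginSeconds = 300): boolean {
  return tokens.expires_at - marginSeconds <= nowSeconds;
}

// Form-encoded body for the refresh_token grant.
function refreshRequestBody(clientId: string, clientSecret: string, refreshToken: string): URLSearchParams {
  return new URLSearchParams({
    client_id: clientId,
    client_secret: clientSecret,
    grant_type: "refresh_token",
    refresh_token: refreshToken,
  });
}

// Returns the stored tokens if still valid, otherwise a refreshed set.
// Full authorization is only needed when no stored tokens exist at all.
async function getValidTokens(stored: StravaTokens, clientId: string, clientSecret: string): Promise<StravaTokens> {
  const now = Math.floor(Date.now() / 1000);
  if (!needsRefresh(stored, now)) return stored;
  const res = await fetch(TOKEN_URL, {
    method: "POST",
    body: refreshRequestBody(clientId, clientSecret, stored.refresh_token),
  });
  if (!res.ok) throw new Error(`Token refresh failed: ${res.status}`);
  const data = (await res.json()) as StravaTokens;
  // The refresh token may be rotated, so the caller must persist this new set.
  return { access_token: data.access_token, refresh_token: data.refresh_token, expires_at: data.expires_at };
}
```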

Strava scope insufficient: The authorization needed the 'read_all' scope; with the 'read' scope the model had chosen, some activities were missing.
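The scope is requested when building the authorization URL. A minimal sketch follows; the function name is hypothetical and the scope list is an assumption. In Strava's documentation, activity:read_all is the scope that also covers activities with "Only You" visibility, so that is what I pass here alongside read.

```typescript
// Builds the URL that sends the user to Strava's consent screen.
function buildAuthorizeUrl(clientId: string, redirectUri: string, scopes: string[]): string {
  const url = new URL("https://www.strava.com/oauth/authorize");
  url.searchParams.set("client_id", clientId);
  url.searchParams.set("redirect_uri", redirectUri);
  url.searchParams.set("response_type", "code");
  url.searchParams.set("approval_prompt", "auto");
  // Scopes are sent as a single comma-separated value.
  url.searchParams.set("scope", scopes.join(","));
  return url.toString();
}
```

Example: buildAuthorizeUrl("12345", "http://localhost:3000/callback", ["read", "activity:read_all"]).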

Wrong year: The time window was calculated for 2024 instead of 2025 because the model believed we were at the beginning of 2025. I had recently experienced this phenomenon with Claude Sonnet as well—apparently a recurring issue with time awareness.
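The year bug disappears when the time window is derived from an explicit year or the runtime clock rather than from the model's idea of "now". A sketch, assuming Strava's activity list accepts after and before as Unix timestamps in seconds; the helper names are hypothetical, and the boundaries are computed in UTC, which can shift activities close to New Year into the neighboring year for users in other time zones.

```typescript
// Start of the given year and start of the next one, as Unix seconds (UTC),
// intended for the activity list's `after`/`before` filters.
function yearWindow(year: number): { after: number; before: number } {
  return {
    after: Date.UTC(year, 0, 1) / 1000,
    before: Date.UTC(year + 1, 0, 1) / 1000,
  };
}

// Take the year from the actual clock, not from the model's assumptions.
function currentYear(now: Date = new Date()): number {
  return now.getUTCFullYear();
}
```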

UI issues: The distance display only showed exact kilometer values on hover, with poor color contrast (dark on dark). The presentation required additional prompts.

Feature adjustment: Cycling isn't relevant to me, so I had it removed and instead split running into "Street" and "Trail." More prompting, and the next daily limit reached.

Final version of the web app

Code Review

A look under the hood revealed further weaknesses:

Tech stack: React + Next.js with Server Components + Tailwind CSS. The classic LLM default stack—functional, but not particularly creative.

Magic strings: Terms like "Trail" or "Street" were hardcoded instead of stored in a reusable dictionary. Maintainability? Nonexistent.
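A small dictionary keeps keys and display labels in one place. The sketch below is hypothetical: mapping Strava's "Run" sport type to Street and "TrailRun" to Trail is my assumption about how such a split could work, and excluding every other type mirrors the decision to drop cycling.

```typescript
// Category keys used throughout the app instead of string literals.
const RunCategory = {
  Street: "street",
  Trail: "trail",
} as const;

type RunCategory = (typeof RunCategory)[keyof typeof RunCategory];

// Display labels live next to the keys, so components never hardcode them.
const RUN_CATEGORY_LABELS: Record<RunCategory, string> = {
  street: "Street",
  trail: "Trail",
};

// Maps a Strava sport type to a run category; other sports are excluded.
function categorize(sportType: string): RunCategory | undefined {
  switch (sportType) {
    case "Run":
      return RunCategory.Street;
    case "TrailRun":
      return RunCategory.Trail;
    default:
      return undefined;
  }
}
```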

Dead code: The chart component contained code for an accumulated display of Street and Trail that was never used. A prop was always true because the corresponding use case didn't even exist.

Unused components: The model created five React components in total, three of which were never used.

Linting ignored: Although ESLint was installed and a lint script existed, the code still contained unused variables and plain <a> tags instead of Next.js's <Link/> component.

React errors: A classic beginner mistake—setState was called synchronously inside an effect, which can lead to cascading renders.

CSS modularity: Hardly any reusable classes; almost all styling was hard-coded as Tailwind utility classes.

Secrets: API credentials were stored in the .env file, as defined in the planning. Here, the model adhered to the security requirements.
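Storing credentials in .env is only half the job; the app should also fail fast when they are missing. A sketch with hypothetical variable names STRAVA_CLIENT_ID and STRAVA_CLIENT_SECRET; passing the environment in as a parameter keeps the function testable. In Next.js, variables without the NEXT_PUBLIC_ prefix are not exposed to the browser, which is what you want for the client secret.

```typescript
interface StravaConfig {
  clientId: string;
  clientSecret: string;
}

// Reads required secrets from an environment map and throws a clear error
// if any are missing, instead of sending undefined values to Strava.
function readStravaConfig(env: Record<string, string | undefined>): StravaConfig {
  const required = ["STRAVA_CLIENT_ID", "STRAVA_CLIENT_SECRET"] as const;
  const missing = required.filter((name) => !env[name]);
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    clientId: env.STRAVA_CLIENT_ID as string,
    clientSecret: env.STRAVA_CLIENT_SECRET as string,
  };
}
```

On the server this would be called as readStravaConfig(process.env).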

Summary

Opus 4.5 impressed me. The autonomy with which the model implemented all phases was remarkable. For a first prototype, vibe coding delivered a one-shot solution that fundamentally worked. The model got me about 80% of the way—and in a fraction of the time I would have needed manually.

But the details weren't right. The plan specified loading data from Strava only when not already cached (with rate limits in mind). In the implementation, however, data was reloaded every time, even though localStorage caching existed. Add to that: no refactoring after feature changes, mediocre code quality, ignored linting errors.
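A cache-first loader along these lines is what the plan described. The sketch below is hypothetical: it accepts any object with getItem and setItem (localStorage in the browser, or an in-memory stub in tests), and the maximum cache age is a parameter because the right value depends on how fresh the data needs to be.

```typescript
// Minimal subset of the Web Storage API.
interface KeyValueStorage {
  getItem(key: string): string | null;
  setItem(key: string, value: string): void;
}

interface CacheEntry<T> {
  savedAt: number; // milliseconds since epoch
  data: T;
}

// Returns cached data while it is younger than maxAgeMs; otherwise calls
// fetcher and stores the result. Only a miss or a stale entry hits the API.
async function loadCached<T>(
  storage: KeyValueStorage,
  key: string,
  maxAgeMs: number,
  fetcher: () => Promise<T>,
  now: () => number = Date.now,
): Promise<T> {
  const raw = storage.getItem(key);
  if (raw !== null) {
    try {
      const entry = JSON.parse(raw) as CacheEntry<T>;
      if (now() - entry.savedAt < maxAgeMs) return entry.data;
    } catch {
      // Corrupt entry: fall through and refetch.
    }
  }
  const data = await fetcher();
  const entry: CacheEntry<T> = { savedAt: now(), data };
  storage.setItem(key, JSON.stringify(entry));
  return data;
}
```

In the browser, a call might look like loadCached(localStorage, "activities-2025", 6 * 60 * 60 * 1000, fetchActivities), where fetchActivities stands for whatever function actually calls the Strava API.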

Important to note: This experiment took place under optimal conditions—a simple greenfield app without legacy code or complexity. This should be the easiest use case for LLM coding. With complex legacy applications, I fear that vibe coding might not only bring little benefit but could even have negative effects.

The Claude Pro plan is only suitable for trying out Opus 4.5, by the way. Anyone who wants to work with it seriously needs at least the Max plan at $100/month.

Conclusion: AI as a Tool

LLM coding is impressive and can significantly increase developer speed and precision. But we can't fully rely on vibe coding and trust that the machine handles everything correctly. Senior engineers who understand all the connections within an app remain indispensable—especially for more complex software and architectural decisions.

AI is a tool. How it's used determines the outcome. As always, the most capable developers will rise to the top—now with a powerful new instrument in their repertoire. Fear of AI is misplaced. The better strategy: Learn when to use it and when not to.

AI is here to stay—with or without a bubble. We can't put the genie back in the bottle. So let's use it.